With AI, the financial bubble is easy to see: hyperscaler capex, a handful of firms carrying indices, and a narrative that capital has finally found a new frontier. The technical bubble is visible too: scaling laws, benchmark fever, and a faith that more compute will wash away old limitations. The cultural bubble is subtler. It’s not only hype or fear; it’s the sense that AI is about to rewire our shared life - the way we imagine, create, argue, govern, and even who “we” includes.

To end a series on bubbles with culture is to assert that technology is not simply a machine; it is a way of making worlds. Rail rearranged distance; electricity rearranged nights; the internet rearranged attention. AI’s cultural bubble invites one more rearrangement.

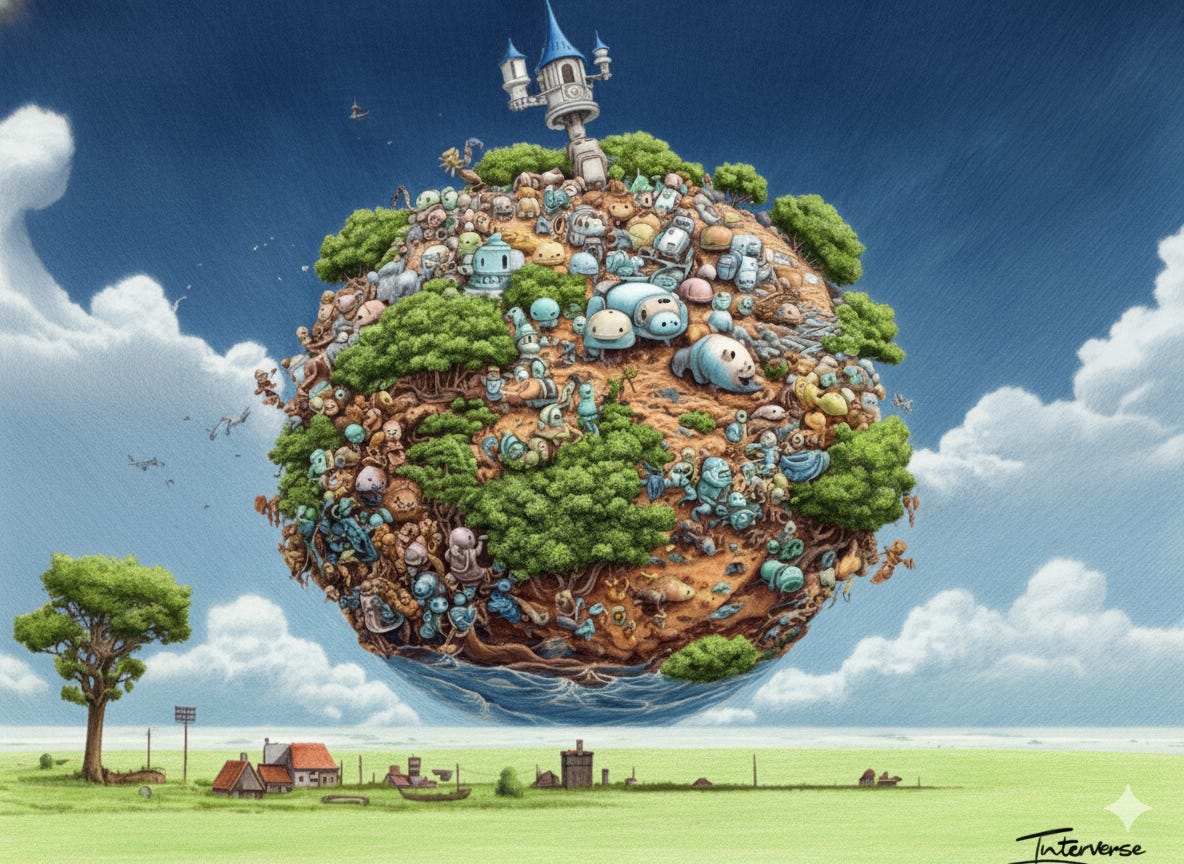

I want to name that world with a term from an earlier essay: the Interverse. If the Metaverse was the technologist’s fantasy of immersion, the Interverse is the humanist’s reality of connection. In the Interverse, computation recedes to the background, like writing or electricity. It becomes the grammar of our collective life - ambient, taken for granted, everywhere and nowhere at once. Near-term, that means a human–human Interverse, a mature, intelligent internet that connects people rather than just machines. Longer-term, it gestures toward an interspecies Interverse, a world where whales, forests, and cities have voices we can hear. Both paths are plausible; both require AI as the enabling substrate.

The Interverse can go dystopian as easily as it can go humane. It inherits the old monsters - platforms, bureaucracies, and markets - that already shape our lives. It’s very hard to predict, especially the future, but it might be possible to specify what becomes possible when intelligence itself becomes infrastructure, and to design institutions for a human and more-than-human world.

Ten thoughts follow.

1) From shoggoths to scaffolds

A useful starting point comes from Henry Farrell and Cosma Shalizi’s provocation that large language models are not alien invaders so much as familiar monsters, akin to markets and bureaucracies. Markets and bureaucracies compress diffuse human knowledge into prices, forms, and ballots, and then direct action based on that compression. They are vast, impersonal, sometimes indifferent to individual suffering, and yet indispensable for coordination at scale. LLMs are simply the newest members of this family of cognitive machinery. The right question is not, “Will they become our overlords?” but, “How will they interlock with the institutions we already have?”

If models are cultural technologies, then culture changes as we retrofit our newsrooms, schools, hospitals, studios, and city halls to incorporate AI. In the short run, the cultural bubble looks like a flood of synthetic text and images; in the medium run, it looks like new routines: fact-checking that is continuous rather than episodic; translation and subtitling by default; collaborative drafts that lower the cost of getting to version 1. In the long run, it looks like ambient general competence, i.e., service-level cognition available when needed, whose value rises and falls with the quality of the institutions that contain it.

2) Prediction as a cultural regime

One reason this shift feels strange is that AI advances under the banner of prediction. Prediction is everywhere in our daily lives: “for you” feeds, estimated arrival times, portfolio signals, health risk flags, model-assisted hiring, promptable assistants that fill the calendar before we ask. A recent argument, coming out of Andreessen Horowitz, so take it with a pinch of salt, suggests that prediction is becoming a successor cultural logic to postmodernism. Whether or not you buy the grand claim, the narrower point is hard to dispute: people now perform identity by making public forecasts and memetic bets; they consume content as anticipatory participation. To be predictive rather than predicted is a posture, even a status claim (it should remind you of “If you are not the customer, you’re the product”).

Markets and social media feel the same: always on, always scoring, always about timing. The thing that circulates is not only the work; it is the expectation attached to it. If you view culture this way, the AI bubble isn’t merely hype; it’s a generalized anticipation machine, i.e., an economy where forecasting has become entertainment, and entertainment a bet.

But a culture of prediction is not yet the Interverse; the bridge between them is ubiquitous computing.

3) Weiser’s forgotten wisdom

Mark Weiser’s classic essay, “The Computer for the 21st Century,” announced a deceptively radical idea: the most profound technologies are those that disappear into the background. He called this “embodied virtuality” - not putting people inside simulated worlds like VR, but drawing computation out into the world with people. By pushing computers to the periphery, he argued, we free attention to focus on others and on goals.

This is the Interverse’s cultural posture. The real work is to let AI recede, to fold it into places, practices, and publics until the machines become grammar rather than protagonist. Intelligent culture is not about immersion in the singularity, but about relationships with other beings.

Here a fork emerges that mirrors the AGI distinction between General Predictive Intelligence (GPreI) and General Productive Intelligence (GProI) from last week. The US cultural build tends toward the predictive: cloud devices, assistants, knowledge work, media; cognition as a service delivered by data centers to screens and earbuds. The Chinese cultural build tends toward the productive: robots, logistics, smart city fabrics; cognition living in sites and systems. Each path tells a different story of how intelligent culture will be made: as anticipation or as embodiment.

The Interverse must hold both.

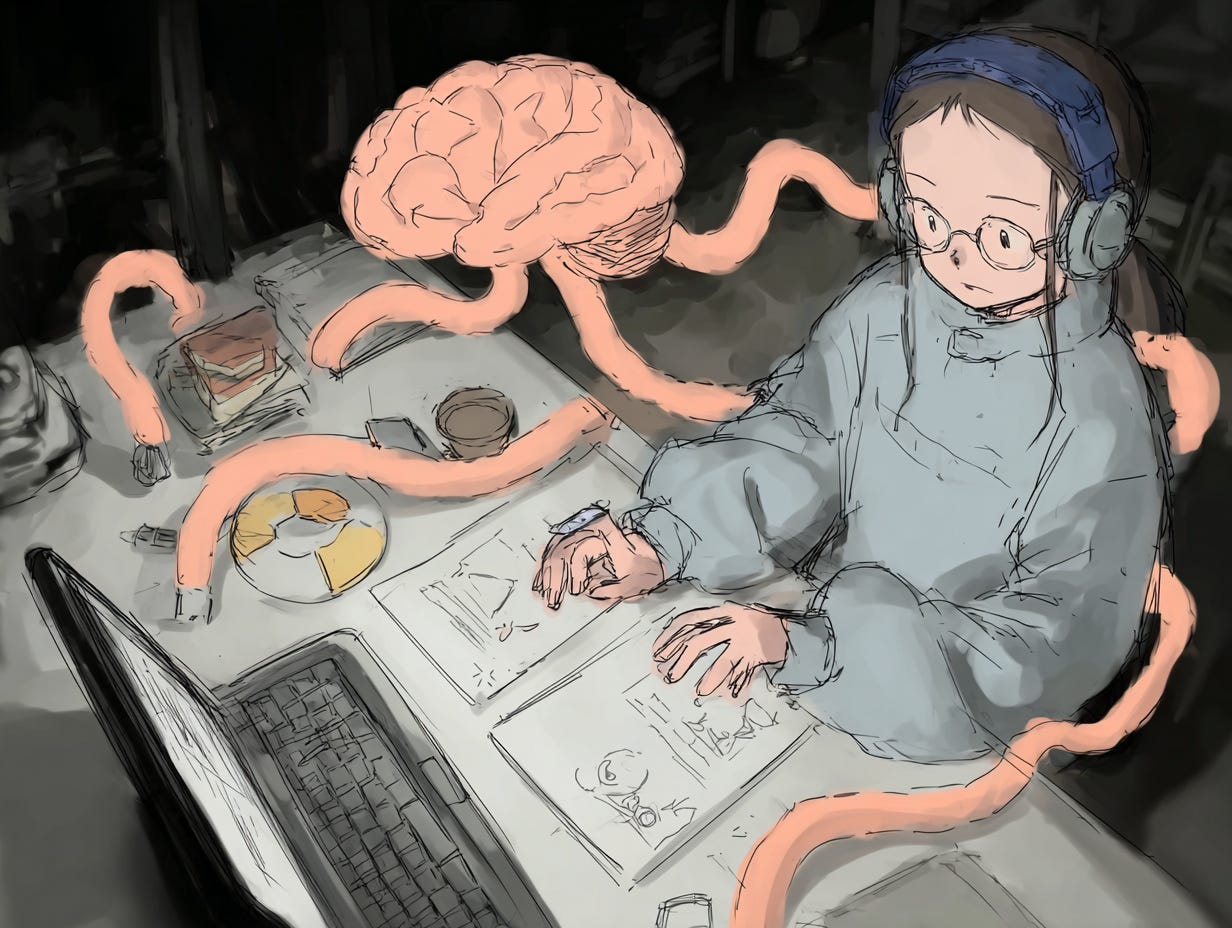

4) The predictive mind and the cultural self

If prediction animates our platforms, it also animates our minds - at least on one influential view. Predictive processing sees the brain as a generative model minimizing error; perception is controlled hallucination, corrected by incoming data. DeepMind’s Generative Query Network demonstrated how a system can infer a 3-D scene from sparse 2-D glimpses by predicting novel viewpoints.

Predictive culture will feel like model alignment at the human scale. We will triangulate the predictions we borrow from machines against the priors we carry in our heads, and those priors will be shaped, in turn, by the scaffolds we inhabit, such as schools, feeds, churches, unions, and labs. The Interverse’s ethical imperative is to design scaffolds that help us revise our priors in public, with humility: common intelligence to go along with common sense.

5) From human–human to interspecies culture

Culture has always been global: stories, religious and philosophical beliefs, music, and trinkets have traveled the globe for millennia. Print culture, however, dramatically accelerated that spread, and novels, radio, and movies brought distant people into our lives.

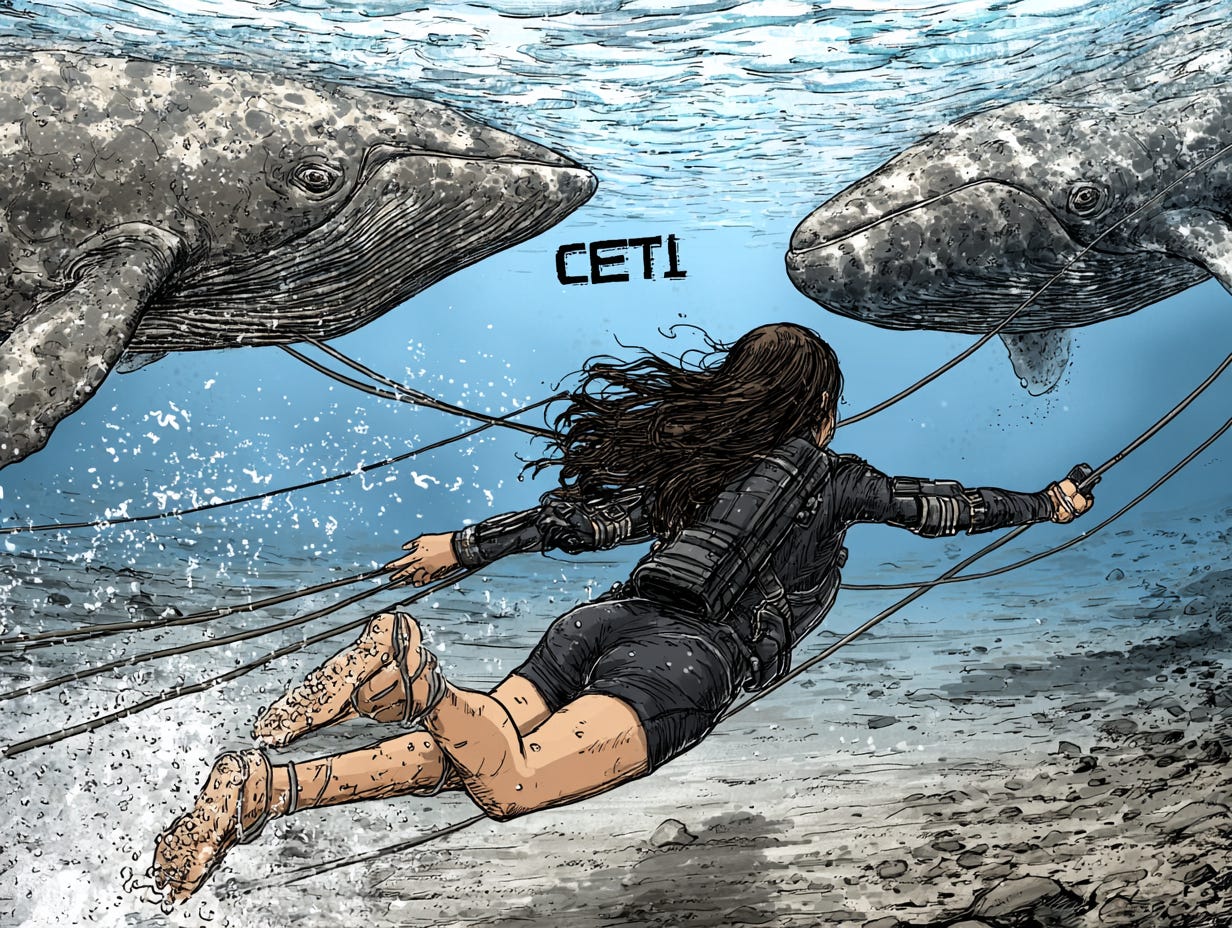

How might AI expand our cultural circle?

The possibility that excites me most is the prospect of bringing non-human animals into our cultural universe. The Cetacean Translation Initiative (CETI) applies machine learning to sperm-whale codas - structured click patterns used in sperm whale social groups - to probe whether they display duality of patterning and other linguistic features. The effort is technically daunting: tagging challenges, massive datasets, distributed stations. Even if it falls short of “full translation,” CETI is a demonstration of the Interverse’s trajectory: AI extends our cultural circle by making other minds legible.

Should we do so though? Will it make it easier for us to exploit animals even more than we do today?

Law and policy should inform the Interverse: personhood debates, habitat rights, ocean governance.

6) Two ways the cultural bubble pops

Bubbles end badly when the thing being sold never had substance. They end productively when exuberance directs investment in infrastructure the next era needs. With AI and culture, two endings are plausible.

Ending A: the Slop Trap. If the economics of attention continue to reward raw engagement, we risk a flood of synthetic slop - cheap content tuned to keep people scrolling, with predictive metrics as judge as well as jury. We’ve seen lesser versions of this for a decade; AI makes it exponential. The cultural bubble then pops into cynicism: a public that assumes fakery by default (my sense is that young people already have a ton of this kind of cynicism), a creator class forced to chase engagement signals, and institutions that privilege simulation over substance (already the way both India and the US are governed today).

Ending B: the Ambient Scaffold. If we push AI into background utilities - translation, retrieval, summarization, simulation, co-drafting, accessibility - and tie them to institutional standards and accountability, the bubble can melt into an Interverse substrate. In that world, independent creators gain leverage; local institutions upgrade their cognitive capacity; publics get tools to compare claims and origins. Inshallah!

We cannot pick B by wishing. We pick it by architecture and protocols: provenance systems, rights frameworks, civic APIs, and procurement rules that favor augmentation over extraction. Weiser’s advice again: push computation to the periphery so people can reappear at the center.

7) The politics of the Interverse

If the Interverse solidifies, it will rewrite who governs the cognitive public realm. A few axes to watch:

Platform power vs. public power. If cultural AI remains concentrated in private stacks, we will outsource agenda-setting to entities optimized for profit. It’s super important to build Public Intelligence! Farrell & Shalizi’s point again: the monsters are ours; they can be governed.

Prediction markets vs. democratic deliberation. If “being predictive” becomes the dominant civic posture, betting could become the primary mode of democratic engagement. Prediction can also assist democracy through scenario tools, budget simulators, and participatory planning models, but those can’t be technical tools alone - we will need a culture of imagination that weaves possibilities and prediction together. Speculative Design has a major role to play!

Cloud centralization vs. site embodiment. If cultural AI lives only in the cloud, it defaults to the Brave New Feed. If it also lives in places such as libraries, schools, galleries, parks, studios, culture regains its public character. I can’t overemphasize the importance of embodiment - not (only) in the sense of the Wuhan AI project, but in making AI public through physical presence in the Weiser sense of that term. This is the GPreI/GProI split applied to the arts and everyday life: cognition as feed, or cognition as fabric.

Human exceptionalism vs. more-than-human publics. If we succeed at interspecies communication, however partial, the Interverse will acquire non-human constituencies. That is not science fiction; it is a governance challenge that we should be embracing with all our hearts.

It’s hard to predict the future, and I am mostly of the mind that we should build datacenter overcapacity, align on a strong moral purpose for AI and let the world reveal what it wants, but here’s a brief list of ‘great to haves.’

8) The human–human Interverse

What should we plausibly see at scale?

Cultural memory aids. Public-grade retrieval over local archives, museums, libraries, newsrooms; explainable provenance attached to works.

Civic copilots. Municipal drafting, grant writing, participatory planning with model-assisted scenario tools; translation and accessibility as defaults.

Studio-in-a-browser. Broadcast-quality editing, scoring, and compositing in ordinary devices; collaborative rooms with rights baked in.

Ambient interpretation. On-device captioning, sign-to-speech and speech-to-sign, description for the visually impaired, reading-level adaptation.

Translingual publics. Real-time subtitling across languages for education, performances, town halls.

Each item is technically feasible now; the hard work will be institutional: standards, rights, and the humility to place connection before product.

9) The interspecies Interverse

Here the Interverse leans into “fiction science”—not fantasies, but research trajectories.

Ocean listening stations that stream marine codas as public signal, annotated by models and humans in the loop.

Forest observatories that turn sensor nets into the lively presence of chorusing birds, water tables, tree electrical activity—so land management becomes conversational.

Urban non-human forums - for rivers, air, pollinators, you name it - where models summarize conditions and residents deliberate with more-than-human stakeholders.

Nonhuman minds may never become transparent to us; we may still only pattern-match and predict responses, but with enough data and the right models, other minds become legible enough to matter in public.

10) Omega

In the near future, we will experience a lot of slop and, with any luck, some emerging scaffolds. But the deeper arc is one of intelligence becoming infrastructure for everyday life - a lasting transformation on the order of electrification or more. I’ve talked about the Interverse as one imagination of that fully fleshed-out cognitive society: the moment when AI lets computation finally do what Weiser promised - move to the background so relations can move to the front.

Farrell and Shalizi remind us not to mystify the moment: we have always lived with monsters of our own making. If the industrial age organized matter, and the first digital age organized information, this next age will organize relations, both human to human, and, if we are lucky and careful, human to more-than-human too. Prediction will be part of it, and so will embodiment and production. The Interverse is the public space in which intelligence disappears into the background so that our shared work of meaning-making can take center stage.